TOP 2%

AGENTIC ENGINEERING

A roadmap for becoming a top 2% agentic engineer in 2026

Written by

@IndyDevDan

Compute co-authors: Claude Opus 4.6 & Gemini 3.1 Pro

"Want to do more with agents? You need to build agents you trust."

What You'll Learn

- Where Do You Rank as an Agentic Engineer?

- The Year of Trust: Why Models Are No Longer the Limitation

- Bet #1: Anthropic Becomes a Monster

- Bet #2: Tool Calling Is the Opportunity

- Bet #3: Custom Agents Above All

- Bet #4: Multi-Agent Orchestration Is the Next Frontier

- Bet #5: Agent Sandboxes

- Bet #6: In-Loop vs Out-Loop Agentic Coding

- Bet #7: Agentic Coding 2.0 - Agents That Conduct Agents

- Bet #8: The Benchmark Breakdown

- Bet #9: Agents Are Eating SaaS

- Bet #10: The Death of AGI Hype

- The Core Four of Agentic Engineering

- Bonus Bet: The First End-to-End Agentic Engineers Emerge

- Where to Start: Your 2026 Agentic Engineering Roadmap

Where Do You Rank as an Agentic Engineer?

Top 15%... Top 10%... Top 5%... or TOP 2%?

Where would you place yourself?

One hundred engineers walk into 2026. Ninety-eight will use AI coding tools. A handful will build custom agents. Only two will build the system that builds the systems. Guess who those two engineers are? After you finish this blog, it'll be you and me.

This post is the roadmap for becoming a top 2% agentic engineer. As you read, you'll see these ideas in your work and in the industry. The more bets you stack up and execute on, the more successful you will be. Ten concrete bets, one central thesis, and a clear answer to the only question that matters this year: Do you trust your agents?

The Year of Trust: Why Models Are No Longer the Limitation

Andrej Karpathy calls the next ten years the decade of agents. But what is 2026 specifically? What is the theme that separates the top 2% of agentic engineers from everyone else?

This is the year of trust. Every bet, every prediction, every action top engineers will take comes down to one question: Do you trust your agents?

There are powerful agentic systems and agentic software engineering workflows you can build that increase the amount of work you hand off to your agents. The question is always: why haven't you pushed your agents to do more? Why haven't you automated that task you did yesterday?

The answer, every single time, is trust. You and I, we don't trust our agents. And it's not a yes-or-no question. Like most things in engineering, it's not that simple. The real question is:

"How much do you trust your agents?"

Think about a team you've worked with or a technology you've used. When there's trust, you move faster. That speed gives you more iterations on the problems you're solving. More iterations directly increase your impact.

This is why the central thesis is so powerful for thinking about how to become a top 2% agentic engineer. Every bet we'll discuss is a concrete way to increase the trust you have in your agentic systems.

Enough /init. Let's start by addressing the monster in the room: Anthropic.

Bet #1: Anthropic Becomes a Monster

Claude Code was luck. Sonnet 3.5 was luck. But everything since then has been a masterclass in execution. Anthropic is not trying to win everyone. They have been focused on one avatar: the engineer.

Look at the timeline. June 2024: Sonnet 3.5, one of the best tool calling models in existence. February 2025: Claude 3.7 and Claude Code, the big introduction to agentic coding. Then the Claude Code SDK for custom agents. Then sub-agents. Opus 4.1, Sonnet 4.5, Opus 4.5, Skills, Multi-Agent Orchestration, Opus 4.6, Sonnet 4.6, the list goes on and on and on.

Notice that this is not just about the model anymore. It is about what you and I can do with the technology. It is about trust, it is about scale. Is the model running in a good harness? Is it caching tokens for you? Can you spin up more compute from that one harness through sub-agents?

How does this tie into trust? When you bet on one company with the best track record for engineers, the best tool calling, the best agent harness, and consistent execution, your agents have the best foundation. You spend less time fighting tooling and more time shipping.

Now don't get me wrong — as much as I've hyped up, shared, demo'd, and discussed the great technology and innovation from Anthropic, I want to be clear. Anthropic is not perfect. They have a history of outages, model quality issues, controversial blocking of model usage, and more. But, as you know, if you do the most important thing right, you can come back from a lot. The best tools are still the best tools and top engineers will always bet on the best tools.

"For agentic coding, for this new realm of agentic engineering, Claude Code has been the leader. When you bet on the leader, you start the race, literally, ahead of the pack."

Bet #2: Tool Calling Is the Opportunity

Here's a simple trick question for you: Do you know what makes AI Agents so valuable? This might sound obvious, but it's not obvious to everyone and the stats show it. Most LLM users are using only a fraction of the power of the models. Let me say this concisely: Tool calling is the opportunity.

Only fifteen percent of output tokens are tool calls, according to OpenRouter. Do you understand what that means? That means roughly 85% of output tokens are plain text generation, when LLMs can do SO MUCH MORE. This is the greatest opportunity for engineers that will exist for the next year.

Think about what a tool call is. It is your agents taking real actions as you would. Your agents are calling bash commands, reviewing work in the browser, taking screenshots, running arbitrary skills that can call any CLI, script, or tool you build.
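To make that concrete, here is a minimal sketch of what happens when a model emits a tool call. Everything in it is an assumption for illustration: the `ToolCall` shape, the `run_bash` and `read_file` tools, and the `dispatch` helper are hypothetical names, not the API of any specific agent SDK. Real harnesses add schemas, permissions, and error handling on top.

```python
import subprocess
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass
class ToolCall:
    """Hypothetical shape of a tool call emitted by a model."""
    name: str
    args: dict


def run_bash(command: str) -> str:
    """Run a shell command and return its stdout (illustrative only, no sandboxing)."""
    result = subprocess.run(command, shell=True, capture_output=True, text=True, timeout=30)
    return result.stdout.strip()


def read_file(path: str) -> str:
    """Return the contents of a text file."""
    return Path(path).read_text()


# The registry is the agent's action surface: tool name -> callable.
TOOLS: dict[str, Callable[..., str]] = {"run_bash": run_bash, "read_file": read_file}


def dispatch(call: ToolCall) -> str:
    """Execute a tool call and return the result that gets fed back to the model."""
    if call.name not in TOOLS:
        return f"error: unknown tool '{call.name}'"
    return TOOLS[call.name](**call.args)


if __name__ == "__main__":
    print(dispatch(ToolCall("run_bash", {"command": "echo hello from a tool call"})))
```

The model only produces the name and the arguments. Your code decides what actually runs, which is exactly where you get to build trust.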

Tool calls are a rough proxy for impact, for actions. This is what turns the big three into the core four: context, model, prompt, tools. This is what creates agentic coding. You're not AI coding anymore. You're agentic coding. Tools are the difference.

- Tool calls roughly equal impact. The longer the sequence of correct tool calls, the more trust you have in your agents.

- Anthropic dominates tool calling. Claude 3.7, then Sonnet 4, then Opus 4.5, then Opus 4.6, then (insert new model here) -- each one took over the top spot for models that call tools on OpenRouter. The crazy thing is... this is strictly what OpenRouter reports. The real numbers go through Anthropic's API.

- Custom agents with custom tools are the best way to maximize tool calls and impact.

The question becomes: what is the best way to increase your chance of your agents calling the right long sequence of tools? The model matters, yes. But what comes next is even more important.
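Here is a rough way to see why long correct sequences are hard to get. This sketch assumes every tool call succeeds independently with the same probability, which is a simplification and not a measured property of any model. The function name `sequence_success` is made up for this example.

```python
def sequence_success(per_call_accuracy: float, calls: int) -> float:
    """Chance that every call in a sequence is correct, assuming independent calls."""
    return per_call_accuracy ** calls


if __name__ == "__main__":
    for accuracy in (0.90, 0.95, 0.99):
        for calls in (5, 20, 50):
            chance = sequence_success(accuracy, calls)
            print(f"accuracy={accuracy:.2f} calls={calls:>2} -> {chance:.3f}")
```

Under that assumption, small gains in per-call reliability compound quickly as the sequence gets longer. That is why the model matters, and why everything you wrap around the model matters too.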

Bet #3: Custom Agents Above All

"Right now, there is a custom agent running somewhere, doing someone's job better than they can."

Would you believe a custom agent with fifty lines of code, three tools, and a hundred-fifty-line system prompt could completely automate a massive problem you or your team face every day?

This is the most important bet on the entire list. Custom agents solve specialized problems, and specialized problems make you a massive amount of money. This is what engineering is about. You build tailored solutions to solve specific problems that someone is willing to pay for. Custom agents unlock that opportunity with compute, prompts, and agents.

Using agentic coding tools is table stakes now. The next step up is learning to build custom agents with an agent SDK. Every big AI lab has an agent SDK. Pick one, lock it in, use it.

"One well-crafted agent can replace thousands of hours of manual work."

Let me share a dirty truth with you. In return, remember you heard it here first: Right now, there is a custom agent running somewhere, doing someone's job better than they can. It is shockingly small -- a small file, a handful of tools, one focused system prompt.

The trick is: Can you build this? Do you know what it takes? Can you prompt engineer? Can you context engineer? Do you know the leverage points of Principled AI Coding?

When you fine-tune an agent to solve one problem extraordinarily well, your trust in that agent skyrockets. It all comes back to trust. The limitation is not the model. It is our ability to put together the right context, model, prompt, and tools.
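What does a small custom agent look like in practice? Below is a minimal sketch of the loop, written so it runs offline. The big assumption: `scripted_model` is a stand-in for a real LLM call, and the two tools (`list_commits`, `count_lines`) return canned data. None of these names come from a real SDK. Swap the stand-in for your agent SDK of choice and the shape stays the same.

```python
from typing import Callable

SYSTEM_PROMPT = "You are a changelog agent. Use tools to gather facts, then answer."


def list_commits(since: str) -> str:
    """Hypothetical tool: a real agent would shell out to git here."""
    return "fix: retry on timeout\nfeat: add export button"


def count_lines(text: str) -> str:
    """Hypothetical tool: count the lines in a block of text."""
    return str(len(text.splitlines()))


TOOLS: dict[str, Callable[..., str]] = {"list_commits": list_commits, "count_lines": count_lines}


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for a real LLM call. Picks the next step from the tool results so far."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "list_commits", "args": {"since": "last release"}}
    if len(tool_results) == 1:
        return {"tool": "count_lines", "args": {"text": tool_results[0]["content"]}}
    return {"final": f"{tool_results[1]['content']} changes since last release."}


def run_agent(task: str, model=scripted_model, max_steps: int = 10) -> str:
    """The agent loop: ask the model, run the tool it picks, feed the result back."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}, {"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model(messages)
        if "final" in step:
            return step["final"]
        result = TOOLS[step["tool"]](**step["args"])
        messages.append({"role": "tool", "name": step["tool"], "content": result})
    return "stopped: step limit reached"


if __name__ == "__main__":
    print(run_agent("Summarize what changed since the last release."))
```

The loop itself is tiny. The leverage is in the system prompt, the tool choices, and the step limit that keeps a confused agent from running forever.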

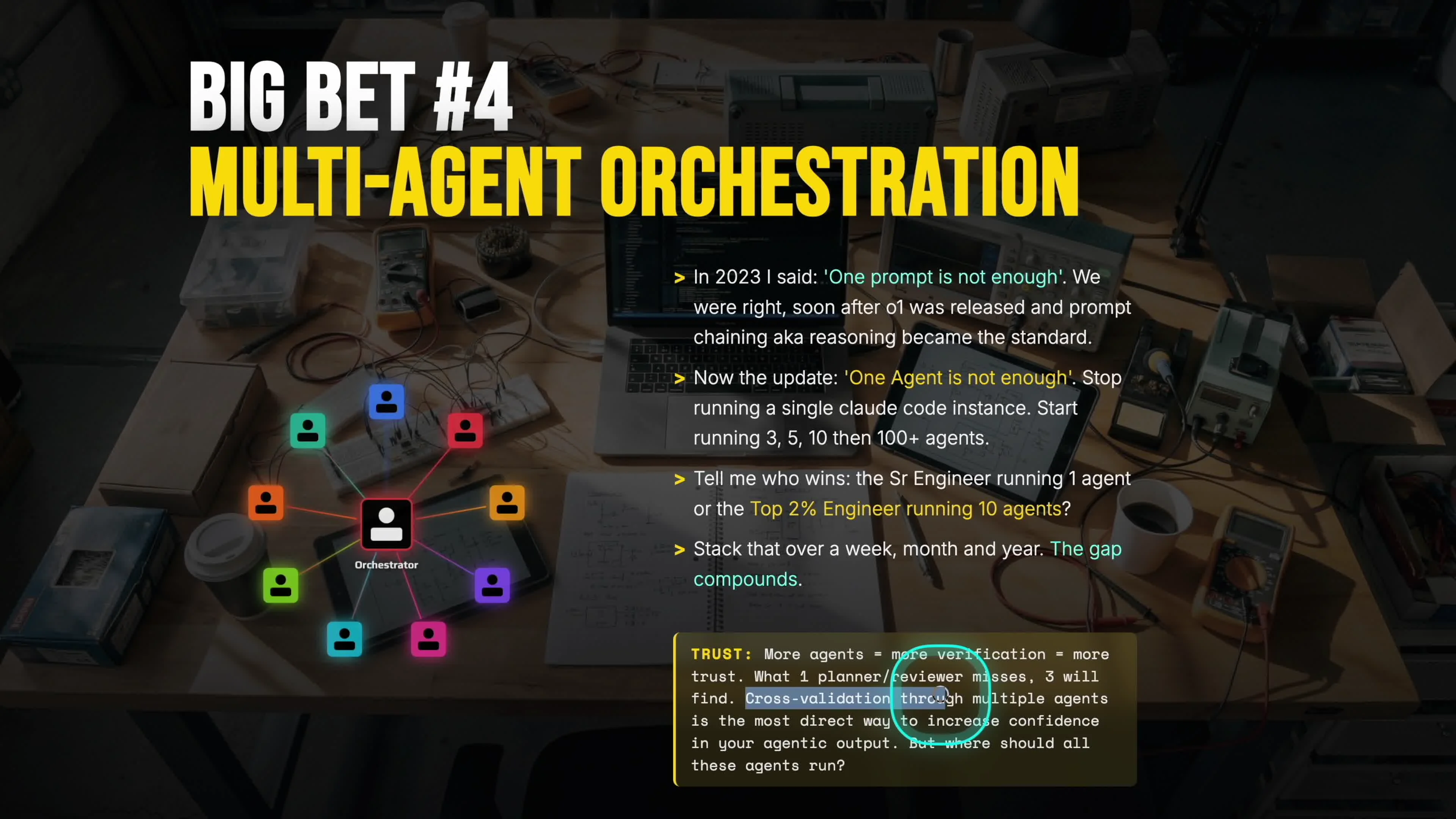

Bet #4: Multi-Agent Orchestration Is the Next Frontier

In 2023, the lesson was clear: one prompt is not enough. Then reasoning became the standard. Now, the update: one agent is not enough. Stop running a single Claude Code instance. Start running three, five, ten, hundreds.

This is one of the most important types of 'threads' we break down in Thinking in Threads: the P-Thread. Your prompts, skills, and agents can run in parallel. With parallelism, you can be in many places at once. You know this. But you also know that eventually you hit limits. We run out of mental bandwidth, and we run out of context. The next frontier: you and I need a way to coordinate our agents.

"What if we could create a single interface that could manage our agents for us?"

Tell me who wins: a senior engineer with one agent, or the top 2% running 3 teams of 10 agents? Assuming each agent is handling a real, non-trivial task.

Obviously, this is a stupid question. But it's an important one to emphasize. Stack up the 30 vs 1 agent scenario over a week, a month, and a year, and we are talking orders of magnitude of difference in output.

It's clear: the next frontier is Multi-Agent Orchestration.

This is the path of all agentic engineers. Starting from the default level to the highest levels of impact.

- First you start with a base agent - you start with the default out of the box agentic coding experience.

- Then you make it better -- you push your tool, build some skills/prompts, learn prompt engineering, and context engineering.

- Then you add more --- you add more agents, you parallelize them, open multiple terminals, and use git worktrees, etc.

- Then you customize them ---- you specialize your agents, build your own tools, build your own system prompts.

- Then you orchestrate ----- You get meta, and build a custom agent that can CRUD + Prompt other agents.

Why does this work? Because more agents give you more verification, and that gives you more trust. What one planner or reviewer will miss, three will find. You can use powerful techniques like cross-validation through multiple agents to directly increase the trust of your agentic output. Then with your orchestrator, you can delegate, monitor, and coordinate your teams of agents.
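Cross-validation through multiple agents can be sketched in a few lines. In this example the three reviewers are plain stub functions standing in for real reviewer agents, and the names (`security_reviewer`, `style_reviewer`, `paranoid_reviewer`, `cross_validate`) are invented for illustration. The point is the pattern: run them in parallel, then count how many independently flagged the same issue.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Reviewer = Callable[[str], set[str]]


def security_reviewer(diff: str) -> set[str]:
    """Hypothetical reviewer agent, stubbed as a plain function."""
    return {"hardcoded secret"} if "API_KEY=" in diff else set()


def style_reviewer(diff: str) -> set[str]:
    """Hypothetical reviewer that only cares about leftover debug output."""
    return {"debug print left in"} if "print(" in diff else set()


def paranoid_reviewer(diff: str) -> set[str]:
    """Hypothetical reviewer that overlaps with the others on purpose."""
    findings = set()
    if "API_KEY=" in diff:
        findings.add("hardcoded secret")
    if "eval(" in diff:
        findings.add("eval on user input")
    return findings


def cross_validate(diff: str, reviewers: list[Reviewer]) -> dict[str, int]:
    """Run every reviewer in parallel and count how many flagged each finding."""
    with ThreadPoolExecutor(max_workers=len(reviewers)) as pool:
        results = list(pool.map(lambda review: review(diff), reviewers))
    votes = Counter(finding for findings in results for finding in findings)
    return dict(votes)


if __name__ == "__main__":
    sample_diff = 'API_KEY="abc"\nprint(eval(user_input))'
    reviewers = [security_reviewer, style_reviewer, paranoid_reviewer]
    for finding, count in sorted(cross_validate(sample_diff, reviewers).items()):
        print(f"{count}/{len(reviewers)} reviewers: {finding}")
```

Findings with more votes deserve more trust. Findings with a single vote are where one reviewer caught what the others missed.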

Before (Single Agent): One prompt at a time. One perspective. One chance to get it right. You babysit the agent through every step.

vs

After (Multi-Agent Orchestration): Multiple teams of agents, multiple perspectives, cross-validation. Your orchestrator delegates, monitors, and coordinates.

Running Claude Code with sub-agents was the first look at orchestrating multiple agents through an agent. Your primary agent prompts your sub-agents. This is the beginning of multi-agent orchestration.

Multi-Agent Orchestration will take many shapes. Cloud tools, terminal UIs, agent pipelines, agentic workflows, personal AI assistants (OpenClaw), and more.

My warning to engineers is this: Don't outsource your mastery of agents at scale. If you do, you'll be limited to the capabilities of the tools you use. Here's a key idea to remember.

Experiment, try tools, lean on them, but don't outsource your understanding of the MOST IMPORTANT technology for engineers in 2026 and beyond: Agents.

Once you start scaling your impact with your multi-agent systems, you'll run into the next constraint: where do you put all these agents?

Bet #5: Agent Sandboxes

As you scale up your agents, you quickly hit a practical problem: where do you put them? Are you really letting them all run rampant on your computer? A lot of vibe coders are figuring out that running agents unconstrained is how you wipe your machine, leak your API keys, and expose private data.

The answer is agent sandboxes. Let your agent run rampant in its own computer. The top state-of-the-art models can do this now.

High-trust agentic engineering sometimes just means having a space where even if everything goes wrong, it does not matter. This is what the dev box is. This is what the staging environment is. We are building systems of trust where only production matters.

This is the best-of-N pattern. Spin up ten agents, each in its own computer. Everyone gets a shot. You do not care about any one of them except the winner. You defer trust until you need it. Let the agents prove themselves.

- Parallelize and isolate both greenfield and brownfield codebases in agent sandboxes

- Lazy-load trust -- let the agent complete the work, then pull the result down when ready

- You don't need trust until the merge. Sandboxes let you defer it until you are merging back to main

Top 2% Engineers will parallelize, scale, and isolate greenfield and brownfield codebases in agent sandboxes to scale their impact and defer trust entirely.
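Here is a toy version of best-of-N with deferred trust. Read the assumptions carefully: each "agent" is a stub that writes a candidate file, each "sandbox" is only a temporary directory, and the verifier uses `exec` in the host process. That is NOT real isolation. A real setup would use a container, VM, or hosted sandbox. All names (`make_agent`, `score`, `best_of_n`) are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import Callable

# Each "agent" is a stub that writes a candidate solution into its own directory.
Agent = Callable[[Path], None]


def make_agent(source: str) -> Agent:
    """Build a stub agent that always produces the given source code."""
    def agent(workdir: Path) -> None:
        (workdir / "solution.py").write_text(source)
    return agent


def score(workdir: Path) -> int:
    """Hypothetical verifier: count how many checks the candidate passes."""
    namespace: dict = {}
    try:
        # exec in the host process is for illustration only, not a real sandbox.
        exec((workdir / "solution.py").read_text(), namespace)
        add = namespace["add"]
        return sum([add(1, 2) == 3, add(-1, 1) == 0, add(0, 0) == 0])
    except Exception:
        return 0


def best_of_n(agents: list[Agent]) -> tuple[int, int, str]:
    """Run each agent in its own directory; return (winner index, score, winning source)."""
    best = (-1, -1, "")
    for index, agent in enumerate(agents):
        with tempfile.TemporaryDirectory(prefix=f"sandbox-{index}-") as tmp:
            workdir = Path(tmp)
            agent(workdir)
            result = score(workdir)
            if result > best[1]:
                # Only the winner's output is pulled out of its sandbox.
                best = (index, result, (workdir / "solution.py").read_text())
    return best


if __name__ == "__main__":
    candidates = [
        make_agent("def add(a, b): return a - b"),
        make_agent("def add(a, b): return a + b"),
        make_agent("def add(a, b) return a + b"),  # syntax error on purpose
    ]
    winner, points, source = best_of_n(candidates)
    print(f"winner: agent {winner} with {points}/3 checks")
```

Nothing leaves a sandbox until it has been scored. Trust is only spent on the one result you pull down.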

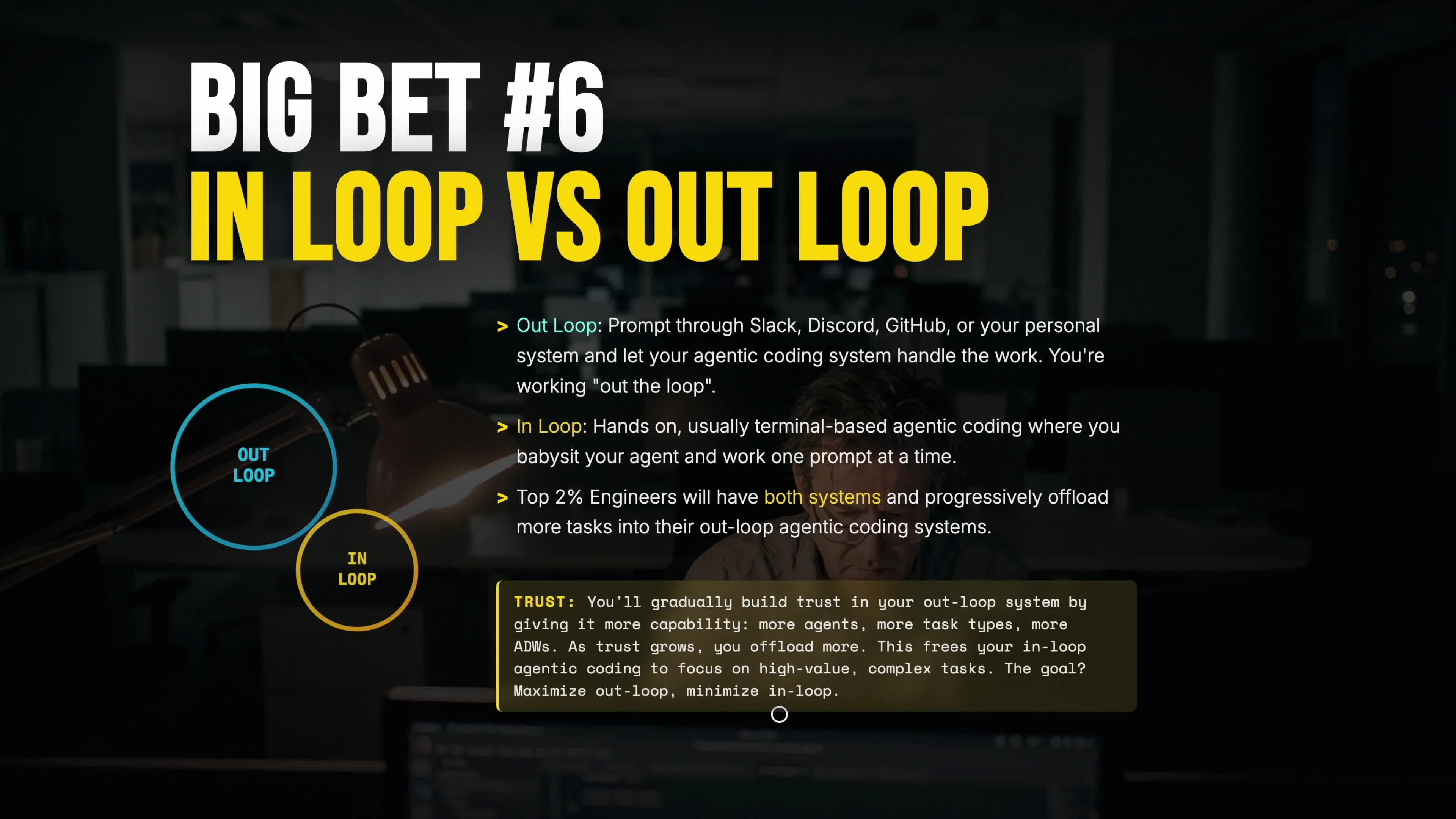

Bet #6: In-Loop vs Out-Loop Agentic Coding

There are two main types of agentic coding: in-loop and out-loop.

Out-loop is where you prompt through an external system -- Slack, Discord, GitHub, or your own personal system -- and let your agentic coding system handle the work. It submits a PR or delivers a concrete result.

In-loop is what most engineers are doing. Terminal-based agentic coding where you babysit your agent, prompting back and forth, one prompt at a time.

In-loop is powerful. But there are tasks you are doing that you do not need to be doing. Top 2% engineers will have both systems, progressively offloading more tasks into their out-loop agentic coding system.

In-Loop: Your precious engineering time in the terminal. High-value work that requires active steering. This is where you dial into the most important problems.

vs

Out-Loop: Your team of agents handling tasks autonomously. More agents, different task types, more AI developer workflows. As trust grows, you offload more work here.

The goal is to maximize out-loop and minimize in-loop. Build trust in your out-loop system gradually, and free up your in-loop time for the work that matters most.
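One way to make "build trust gradually" mechanical is to track how often out-loop results get accepted and route by that record. This is a sketch under my own assumptions: the `TrustLedger` class, the `min_runs` and `threshold` defaults, and the task type names are all made up for illustration. Tune them to your own risk tolerance.

```python
from dataclasses import dataclass, field


@dataclass
class TrustLedger:
    """Tracks how often agent results were accepted without changes, per task type."""
    min_runs: int = 5        # hypothetical: runs needed before a task type can go out-loop
    threshold: float = 0.9   # hypothetical: acceptance rate required to go out-loop
    history: dict[str, list[bool]] = field(default_factory=dict)

    def record(self, task_type: str, accepted: bool) -> None:
        """Log whether a result for this task type was accepted as delivered."""
        self.history.setdefault(task_type, []).append(accepted)

    def acceptance_rate(self, task_type: str) -> float:
        runs = self.history.get(task_type, [])
        return sum(runs) / len(runs) if runs else 0.0

    def route(self, task_type: str) -> str:
        """Return 'out-loop' once a task type has earned it, otherwise 'in-loop'."""
        runs = self.history.get(task_type, [])
        if len(runs) >= self.min_runs and self.acceptance_rate(task_type) >= self.threshold:
            return "out-loop"
        return "in-loop"


if __name__ == "__main__":
    ledger = TrustLedger()
    for _ in range(5):
        ledger.record("dependency bump", accepted=True)
    for accepted in (True, False, True, False, True):
        ledger.record("schema migration", accepted=accepted)
    for task_type in ("dependency bump", "schema migration", "brand new task"):
        print(f"{task_type}: {ledger.route(task_type)}")
```

New and shaky task types stay in-loop by default. A task type moves out-loop only after it has a track record.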

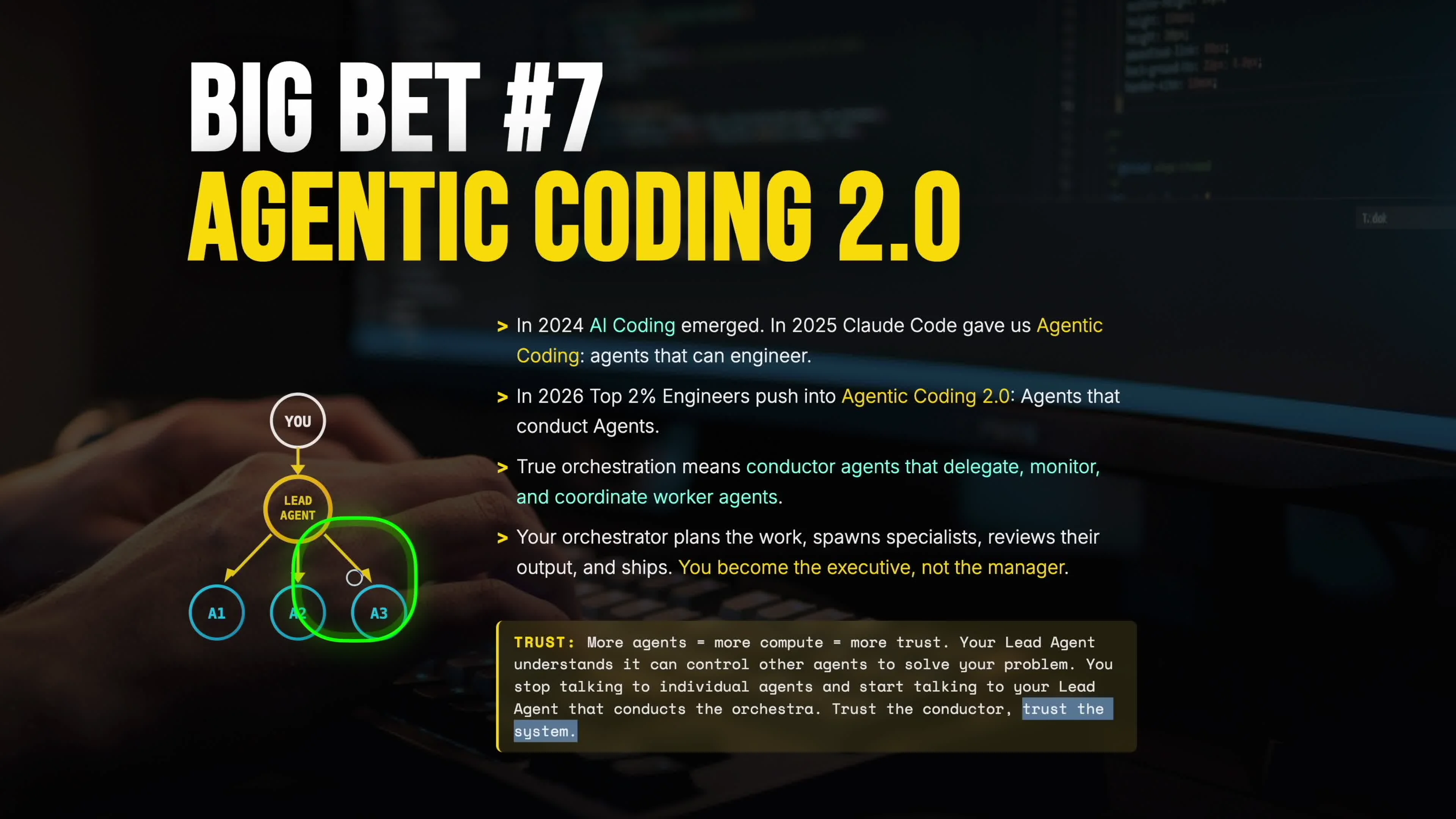

Bet #7: Agentic Coding 2.0 - Agents That Conduct Agents

In 2024, AI coding emerged. Context, model, prompt -- the big three.

In 2025, Claude Code brought us agentic coding. Context, model, prompt, tools -- the core four.

In 2026, top 2% engineers will push into the next level: agentic coding 2.0. You are now talking to your lead agent. Your lead agent spins up the right agent for the job. This is not just parallelization. This is true orchestration.

Your orchestrator plans, spawns, reviews, and ships. You have a lead agent that can delegate, monitor, and coordinate command-level agents. Each worker agent has its own system prompt, its own specific playbook on how to plan, build, review, test, and document that specific work.
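A lead agent that can CRUD and prompt other agents might look something like this. Treat it as a sketch, not a reference design: `WorkerAgent`, `Orchestrator`, and the `ship` pipeline are hypothetical names, and each worker's `handler` is a plain function standing in for a real model-backed agent with its own system prompt.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorkerAgent:
    name: str
    system_prompt: str
    handler: Callable[[str], str]  # stand-in for a real model-backed agent
    log: list[str] = field(default_factory=list)

    def prompt(self, task: str) -> str:
        """Send a task to this worker and record what came back."""
        result = self.handler(task)
        self.log.append(f"{task} -> {result}")
        return result


class Orchestrator:
    """Lead agent sketch: create, read, update, delete, and prompt worker agents."""

    def __init__(self) -> None:
        self.agents: dict[str, WorkerAgent] = {}

    def create(self, name: str, system_prompt: str, handler: Callable[[str], str]) -> WorkerAgent:
        self.agents[name] = WorkerAgent(name, system_prompt, handler)
        return self.agents[name]

    def read(self, name: str) -> list[str]:
        return list(self.agents[name].log)

    def update(self, name: str, system_prompt: str) -> None:
        self.agents[name].system_prompt = system_prompt

    def delete(self, name: str) -> None:
        del self.agents[name]

    def ship(self, feature: str) -> str:
        """Plan, build, review: each step is delegated to a specialized worker."""
        plan = self.agents["planner"].prompt(feature)
        build = self.agents["builder"].prompt(plan)
        verdict = self.agents["reviewer"].prompt(build)
        return build if verdict == "approved" else f"rejected: {verdict}"


if __name__ == "__main__":
    lead = Orchestrator()
    lead.create("planner", "You write plans.", lambda task: f"plan for {task}")
    lead.create("builder", "You write code.", lambda plan: f"code implementing {plan}")
    lead.create("reviewer", "You review code.",
                lambda build: "approved" if "plan for" in build else "missing plan")
    print(lead.ship("csv export"))
```

You talk to `ship`. The lead agent decides which worker gets which prompt, and every worker keeps a log you can read back when you need to verify what happened.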

"You stop talking to individual agents and start talking to your lead agent that conducts the orchestra."

We are moving up the stack again. You are not even the lead engineer anymore. You are the executive, talking to your lead engineer that runs the engineering team. You trust the conductor will deliver the job for you. You trust the system you have built.

This is the convergence of everything: tool calling on top of custom agents, on top of multi-agent orchestration, on top of out-loop systems and in-loop systems. It all turns into one super system: You, your lead agents, and command-level agents that do the actual work.

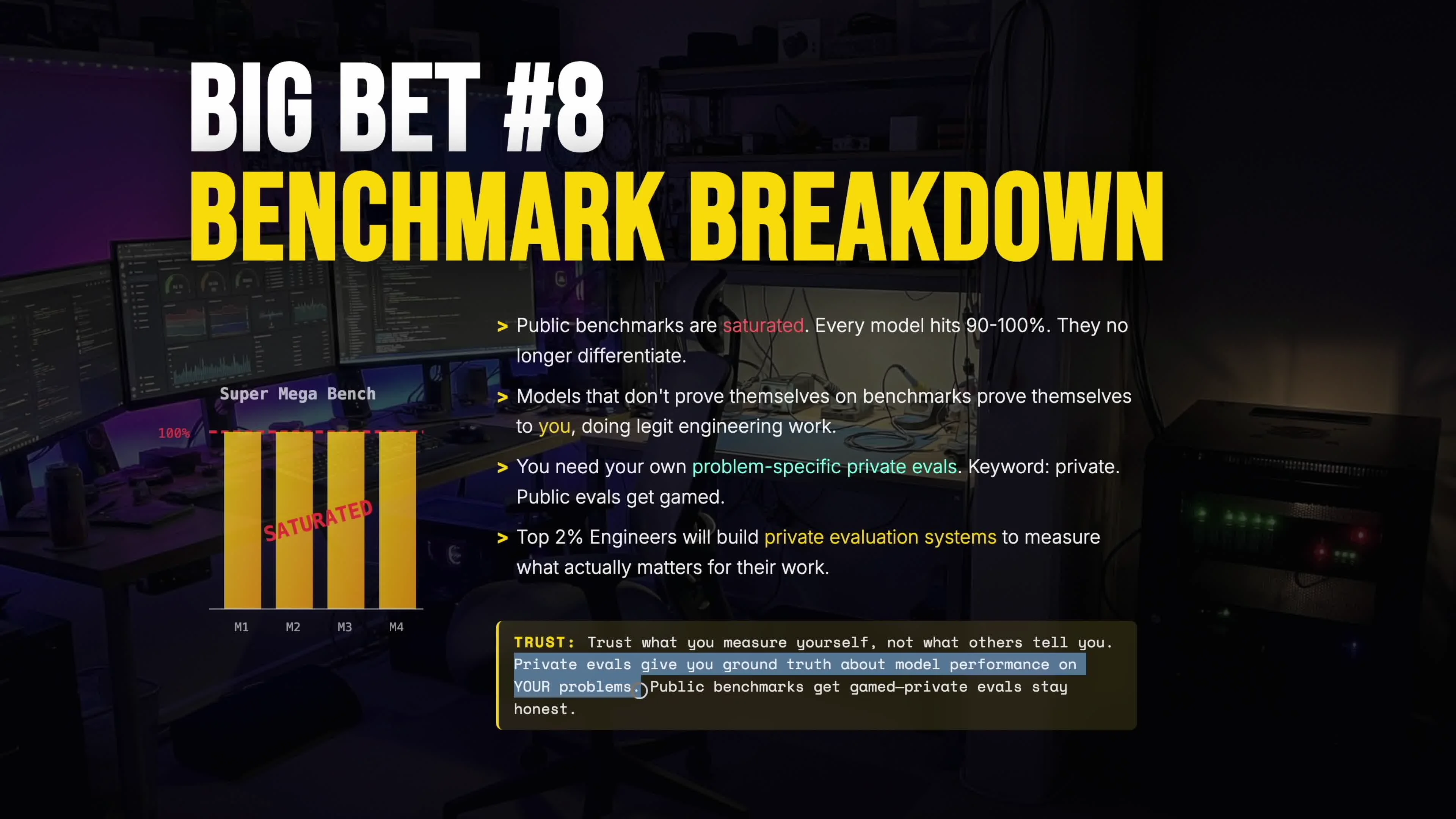

Bet #8: The Benchmark Breakdown

Public benchmarks are getting saturated. Every model hits ninety to one hundred percent. They no longer give you or me real signal. Models do not prove themselves on benchmarks. They prove themselves to you doing legitimate engineering work.

Top engineers are going to build private evaluation systems that will never become public. If you are building a company or building custom agents that do real user-facing work, you will measure it yourself.

There is very likely a model out there right now that can do the job of a custom agent you are running, and you have no idea because you do not have benchmarks. You are probably paying more for an expensive model. And when a new model drops that would give you incredible results, you will not even know it is there.

The bet: top engineers will always have private benchmarks to measure models for the use cases they care about.

Center your personal benchmark around the workflow your users and customers face — that's the real benchmark. Here's how to start:

- Build a test suite from real prompts in YOUR codebase — not synthetic examples from a leaderboard

- Evaluate models against YOUR users' actual workflows — not generic benchmarks or synthetic tasks

- That's the benchmark that matters: how well does the model handle what your customers need?
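A private benchmark does not need to be fancy to be useful. The sketch below assumes you have a handful of real prompts with a pass/fail check each. The `Case` and `Candidate` types, the stub model functions, and the cost numbers are all placeholders I made up, not real models or real prices.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str                   # a real prompt pulled from your own workflow
    check: Callable[[str], bool]  # returns True if the output is acceptable


@dataclass
class Candidate:
    name: str
    run: Callable[[str], str]     # stand-in for a call to a real model
    cost_per_call: float          # hypothetical figure you would fill in yourself


def evaluate(candidates: list[Candidate], cases: list[Case]) -> list[dict]:
    """Score every candidate on every case; sort by pass rate, then by lower cost."""
    rows = []
    for candidate in candidates:
        passed = sum(case.check(candidate.run(case.prompt)) for case in cases)
        rows.append({
            "model": candidate.name,
            "pass_rate": passed / len(cases),
            "cost": candidate.cost_per_call * len(cases),
        })
    return sorted(rows, key=lambda row: (-row["pass_rate"], row["cost"]))


def capable_stub(prompt: str) -> str:
    """Placeholder model that handles both example tasks."""
    return "42.00" if "invoice" in prompt else "billing"


if __name__ == "__main__":
    cases = [
        Case("Extract the invoice total from: Total: $42.00", lambda out: "42.00" in out),
        Case("Classify this ticket: 'refund please'", lambda out: out.strip() == "billing"),
    ]
    candidates = [
        Candidate("big", capable_stub, cost_per_call=0.01),
        Candidate("small", capable_stub, cost_per_call=0.001),
        Candidate("weak", lambda prompt: "unknown", cost_per_call=0.0001),
    ]
    for row in evaluate(candidates, cases):
        print(row)
```

When two candidates pass the same cases, the cheaper one sorts first. That is the model you did not know you could switch to.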

Bet #9: Agents Are Eating SaaS

Every software-as-a-service company that does not start cannibalizing itself with agents will be eaten by an agent-first competitor.

Why would you search Google and get a list of ten items when your agent can do this at absurd scale and speed? Top engineers will realize agents are the interface. Many UIs become prompt interfaces. A lot of the best UIs are going to run double-digit numbers of agents behind the scenes to accomplish highly specific tasks.

Top 2% engineers are going to stop using slow SaaS applications where you click through slow UIs to do one thing that ultimately is just CRUD on top of a database. They are going to take agents and build the minimum tooling -- not even an MCP server -- chop down the tool surface, and optimize tool calling for full control at light speed.
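Minimum tooling over CRUD can be this small. The sketch uses Python's built-in `sqlite3` module with a hypothetical `tickets` table, and the three tool names are invented for this example. The assumption is that your agent only ever sees these three functions, never raw SQL.

```python
import sqlite3


def make_ticket_tools(conn: sqlite3.Connection) -> dict:
    """Expose a deliberately small tool surface over one table (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS tickets (id INTEGER PRIMARY KEY, title TEXT, status TEXT)"
    )

    def create_ticket(title: str) -> int:
        cursor = conn.execute("INSERT INTO tickets (title, status) VALUES (?, 'open')", (title,))
        conn.commit()
        return cursor.lastrowid

    def list_tickets(status: str) -> list[tuple]:
        query = "SELECT id, title FROM tickets WHERE status = ? ORDER BY id"
        return conn.execute(query, (status,)).fetchall()

    def close_ticket(ticket_id: int) -> bool:
        cursor = conn.execute("UPDATE tickets SET status = 'closed' WHERE id = ?", (ticket_id,))
        conn.commit()
        return cursor.rowcount == 1

    # Three tools, no raw SQL access: the agent can only do what you allow.
    return {"create_ticket": create_ticket, "list_tickets": list_tickets, "close_ticket": close_ticket}


if __name__ == "__main__":
    tools = make_ticket_tools(sqlite3.connect(":memory:"))
    first = tools["create_ticket"]("Export button is broken")
    tools["create_ticket"]("Login page is slow")
    tools["close_ticket"](first)
    print(tools["list_tickets"]("open"))
```

A narrow tool surface gives the model fewer ways to pick the wrong action, and gives you fewer actions to audit.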

"Agents are the interface."

Bet #10: The Death of AGI Hype

The AGI and ASI hype is one of the greatest marketing schemes of all time. Time and time again, it has been factually untrue and used to sell headlines. Top 2% engineers stop caring about AGI promises and lock into delivering maximum value with agents.

AGI is a great North Star for deep researchers inside "AI labs". For everyone else, it is a complete waste of time. It is vaporware. It's pure hype. Focus on shipping useful software.

There is no AGI, there is no ASI. There are just agents. The best tool of engineering. Put your team of agents together. Build the best custom agents. Wire up a lead agent that coordinates the whole operation, and ship.

The Core Four of Agentic Engineering: Context, Model, Prompt, Tools

Everything is the core four. Every agent, every workflow, every bet above comes back to this fundamental truth:

- Context -- what your agent knows about your codebase, your problem, your constraints

- Model -- the intelligence engine that reasons about your problem

- Prompt -- how you communicate intent, constraints, and expectations to your agent

- Tools -- the actions your agent can take on your behalf, the bridge between reasoning and impact

In 2024, the big three were context, model, prompt. In 2025, Claude Code added tools and the big three became the core four. This is what creates agentic coding. If you understand this fundamental truth, you will be able to build and operate at the highest possible level.

Do not get baited by any feature or tool. Every new release, every new agent harness, every new paradigm is just a different composition of the core four. Understand that, and you have the mental model to evaluate anything.
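If every agent is a composition of the core four, you can write that down as a single value. This is only a mental-model sketch: the `AgentSpec` class and the model identifiers `model-a` and `model-b` are placeholders, not real products or a real SDK type.

```python
from dataclasses import dataclass, field, replace
from typing import Callable


@dataclass(frozen=True)
class AgentSpec:
    """The core four as one value: context, model, prompt, tools."""
    context: tuple[str, ...]  # files, docs, and constraints the agent can see
    model: str                # hypothetical model identifier
    prompt: str               # the instructions that state intent
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)

    def describe(self) -> str:
        """Summarize the composition so two agents are easy to compare."""
        return (f"model={self.model} | context={len(self.context)} items | "
                f"prompt={len(self.prompt.split())} words | tools={sorted(self.tools)}")


if __name__ == "__main__":
    base = AgentSpec(
        context=("README.md",),
        model="model-a",
        prompt="Fix failing tests",
        tools={"run_tests": lambda: "ok"},
    )
    # A "new release" is often just one of the four swapped out.
    variant = replace(base, model="model-b")
    print(base.describe())
    print(variant.describe())
```

When a new feature or harness shows up, ask which of the four fields it actually changes. Usually it is one.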

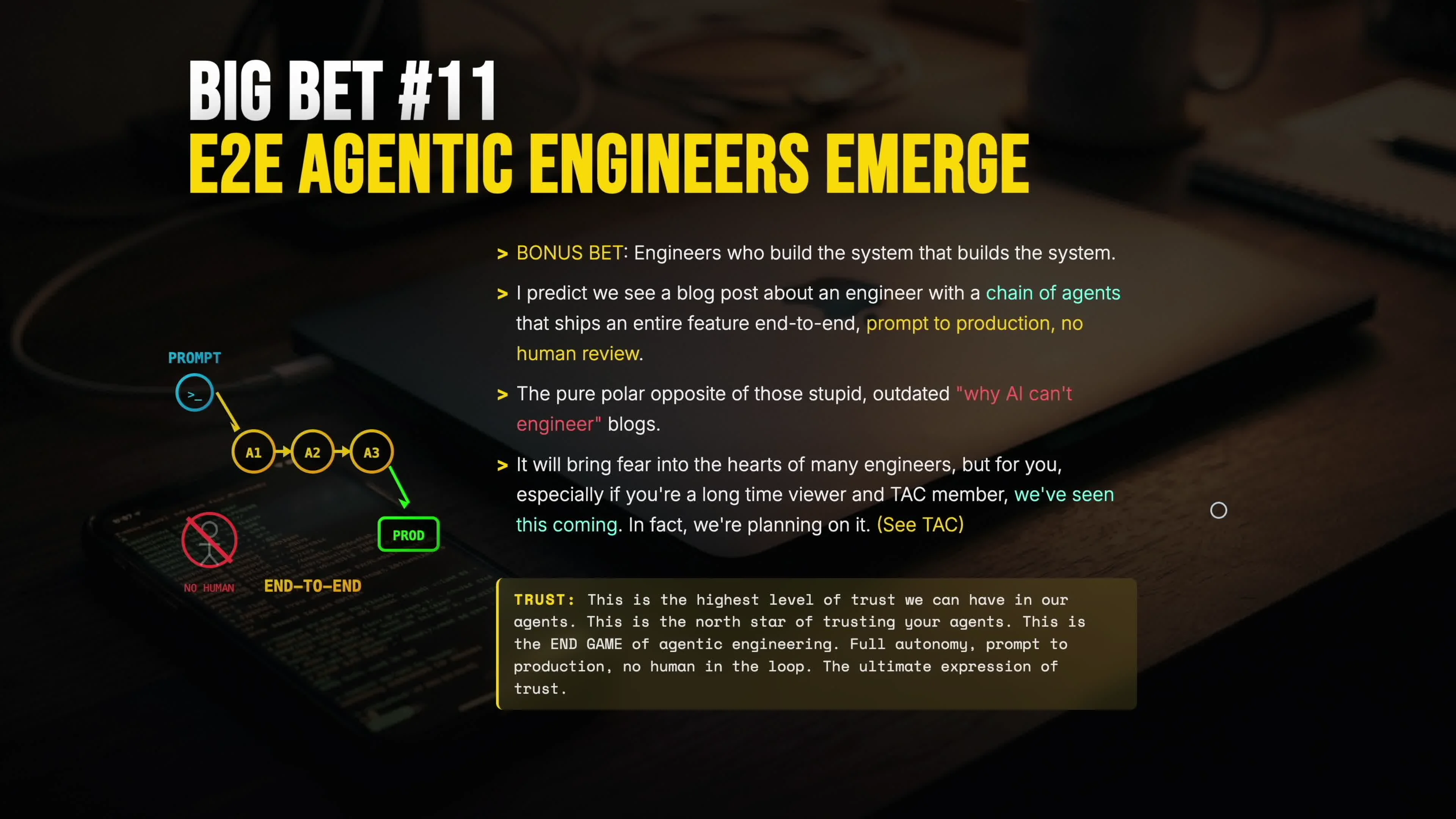

Bonus Bet: The First End-to-End Agentic Engineers Emerge

This is the edge bet. We are going to see the first engineer write a blog post that shares details about their chain of agents that ships an entire, non-trivial, revenue-generating feature end to end. Prompt to production. No review. No human in the loop.

This is going to be the polar opposite of the stupid, outdated, "Why can't AI engineer?" blogs. It's going to freak people out. But for you and me, we'll have seen this coming from miles away.

In the framing of trust, this is the highest possible level. This is the North Star for trusting your agents. Full autonomy, prompt to production, no human in the loop: the ultimate expression of trust in your systems.

"Just like when your manager hands off a feature to the team, and they just disappear. They know the best teams always ship."

If you want to go deeper on all ten bets and the full trust thesis, you can watch the full video presentation where these ideas were originally presented.

Where to Start: Your 2026 Agentic Engineering Roadmap

"The trick isn't execution, it's knowing what bets to take in the first place."

In the age of agents (and the age of infinite slop), the trick isn't execution, it's knowing what bets to take in the first place. If you bet on these bets (and execute on them), you'll be on your way to becoming a top 2% agentic engineer. Don't believe me? That's fine. Do something for me real quick — take this URL and send it to your competition.

If you had to pick one bet, I'd recommend this: Custom Agents Above All. When it comes to signal, when it comes to value, this is the highest return on investment.

After that, start pushing into multi-agent orchestration and agent sandboxes. This will naturally carry you into agentic coding 2.0. Have the mindset: models do not matter anymore. There is no AGI. It's just agents. Base, Better, More, Custom, Orchestrator.

- Step 1: Build your first custom agent. Fifty lines of code, three tools, one system prompt. Solve one problem extraordinarily well. Start with the Claude Code docs.

- Step 2: Add a second agent. Start with a planner and a builder. Learn multi-agent orchestration through doing.

- Step 3: Move agents into sandboxes. Defer trust. Run the best-of-N pattern.

- Step 4: Build your out-loop system. Progressively offload work from in-loop to out-loop as trust grows.

- Step 5: Build your orchestrator. A lead agent that conducts the entire operation. This is agentic coding 2.0.

Assemble your team of agents. Craft the best custom agents you can. Promote a lead agent that orchestrates the rest. Then ship relentlessly. That is what agentic engineering is about. We're not coding anymore, we're orchestrating intelligence.

The next high leverage thing you ship should not be a feature. It should be a team of agents that will ship the feature(s) for you. This is the mindset of the next phase of engineering. You build the system that builds the system. Now, there's just one question you need to answer...

Are you ready to build your team of agents?

Stay focused and keep building.

- Dan